III-3 Scheduling of theCPU

CPU scheduling allows OS to decide which process in the ready queue should be allocated to the CPU. There are several scheduling algorithms.

III-3-1 First-come, first-served scheduling

The FCFS (First Come First Served) algorithm is the simplest scheduling algorithm. CPU is allocated to the first process that requires it. FCFS implementation is easily managed with a FIFO queue. When a process enters the queue of ready processes its PCB is chained to the tail of the queue. When CPU is free it is allocated to the process at the head of the queue. The running process is removed from the queue.

III-3-2 Scheduling the shortest job first

The SJF (Shortest Job First) algorithm is a non-preemptive algorithm. It selects the job (or process) whose processing time it assumes will be the shortest. In the event of a tie, the FCFS scheduling is used.

Originally implemented in a batch processing environment, the SJF algorithm was based on a user-provided time estimate to the submitted job.

The SJF was subsequently modified to be able to use short-term scheduling in an interactive environment. Its decision is now based on the estimation of the processor cycle. This can be calculated as a simple average of the last processor cycles.

The SJF algorithm favors short jobs over long ones. In the most extreme cases, the incessant arrival of short jobs can lead to the starvation1 of long jobs.

Note: Non-preemptive or cooperative scheduling was applied in batch systems where the process retained control of the CPU until it crashed or terminated.

On current interactive systems, preemptive scheduling is used. The scheduler can preempts a process before it crashes or terminates in order to allocate CPU to another process.

III-3-3 Scheduling of the shortest remaining time

The Shortest Remaining Time (SRT) algorithm is the preemptive version of SJF. Each time a new process is introduced into the pool of processes to be scheduled, the scheduler compares the estimated value of remaining processing time to that of the process currently being scheduled. If the processing time of the new process is lower, the process currently being scheduled is preempted.

Just like the SJF algorithm, the SRT favors short jobs. Long jobs, on the other hand, can be victims of starvation.

III-3-4 Round-robin scheduling

The Round-Robin (RR) algorithm was designed specifically for time-sharing systems. It is preemptive and similar to the FCFS algorithm. We define a small unit of time called a time slice or quantum which extends in nowadays OSs, between 10 to 100 milliseconds.

The queue of ready processes is treated as a circular queue. The scheduler cycles through the queue of ready processes, allocating CPU to each process for a time interval of up to one quantum.

To implement RR scheduling, we manage the queue of ready processes in FIFO. New ready processes are added to the back of the queue. The scheduler removes the 1st process from the queue, sets the clock to interrupt after a time slot, and dispatches2 the process.

The use of a very short quantum of time allows a very rapid response time. However, such quantum increases the number of switches between processes, resulting in a loss of efficiency.

III-3-5 Scheduling with priority

Priority scheduling requires that a priority value be assigned to each process. The selected process is the one with the highest priority. The FCFS algorithm is used in the event of a tie. Priority scheduling can be preemptive or not.

The process priority allocation principle varies greatly:

- the priority is based on the characteristic of the process (memory usage, I/O frequency),

- on the user who executes the process,

- usage costs or

- on a setting that the user or administrator can specify.

Some of the mechanisms produce priorities that vary dynamically (volume of execution time) while others are static (the priority associated with a user).

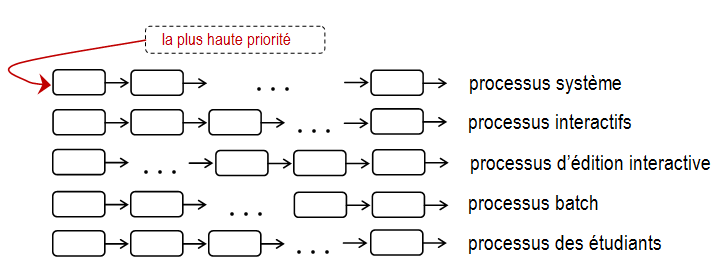

III-3-6 Scheduling with multi-level queues

The algorithms seen so far do not differentiate between processes; this type of algorithm splits the queue of ready processes into several separate queues. Each queue has its own scheduling algorithm.

For example, we could use separate queues for foreground and background processes. The 1st queue can be scheduled in RR and the 2nd in FCFS.

In addition, there must be scheduling between the queues, which is commonly implemented as scheduling with requisition and with fixed priorities. No processes in the low priority queue will be able to run unless the higher priority queues are empty.

Another possibility is to assign time slots to queues. Each process queue gets a certain portion of processor time that can be scheduled among the various processes that compose it.

III-3-7 Scheduling with multi-level feedback queues

In the previous algorithm processes are permanently assigned to a queue when they enter the system. However, this algorithm allows the process to move between queues. The idea amounts to separating processes with different CPU cycle characteristics. If a process uses a lot of CPU time it will be moved to a lower priority queue.

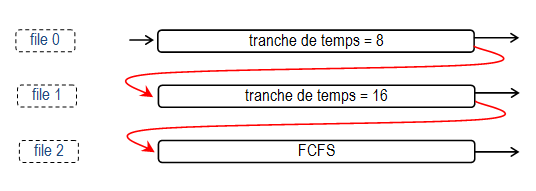

For example, consider a multi-level feedback queue scheduler with three queues numbered 0 to 2 (see figure below).

The scheduler first executes all the processes in queue 0. Only when it is empty will it execute the processes in queue 1. A process arriving at queue 1 will cause a process in queue 2 to be interrupted . A process entering the queue of ready processes will be inserted into queue 0.

This algorithm leaves I/O-dependent processes and interactive processes in the highest priority queue. A process that waits too long in a lower priority queue may be moved to a higher priority queue.

III-4 Thread

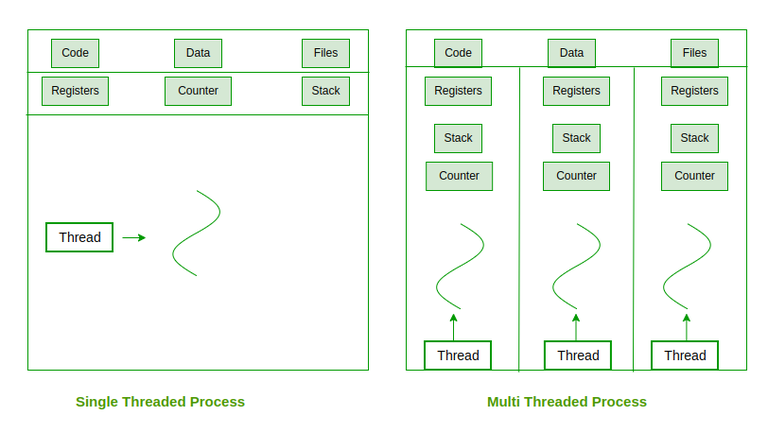

A thread is a single sequence stream within a process. Threads are also called lightweight processes as they possess some of the properties of processes. Each thread belongs to exactly one process.

- In an OS that supports multithreading, the process can consist of many threads. But threads can be effective only if the CPU is more than 1 otherwise two threads have to context switch for that single CPU.

- All threads belonging to the same process share – code section, data section, and OS resources (e.g. open files and signals)

- But each thread has its own (thread control block) – thread ID, program counter, register set, and a stack

- Any OS process can execute a thread. we can say that single process can have multiple threads.

III-4-1 Components of Threads

These are the basic components of the Operating System.

- Stack Space: Stores local variables, function calls, and return addresses specific to the thread.

- Register Set: Hold temporary data and intermediate results for the thread’s execution.

- Program Counter: Tracks the current instruction being executed by the thread.

III-4-2 Types of Thread in Operating System

Threads are of two types. These are described below.

- User Level Thread

- Kernel Level Thread

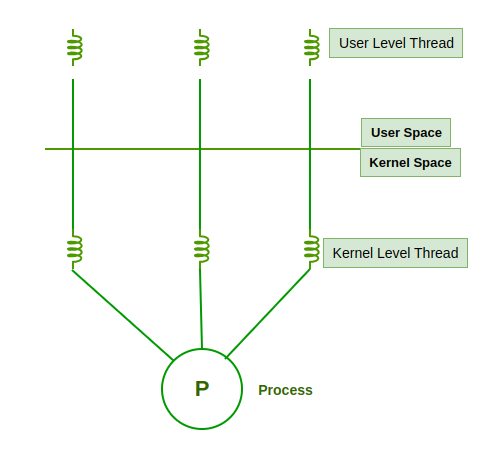

1. User Level Thread

User Level Thread is a type of thread that is not created using system calls. The kernel has no work in the management of user-level threads. User-level threads can be easily implemented by the user. In case when user-level threads are single-handed processes, kernel-level thread manages them. Let’s look at the advantages and disadvantages of User-Level Thread.

2. Kernel Level Thread

A kernel Level Thread is a type of thread that can recognize the Operating system easily. Kernel Level Threads has its own thread table where it keeps track of the system. The operating System Kernel helps in managing threads. Kernel Threads have somehow longer context switching time. Kernel helps in the management of threads.

III-4-3 What is Multi-Threading?

A thread is also known as a lightweight process. The idea is to achieve parallelism by dividing a process into multiple threads. For example, in a browser, multiple tabs can be different threads. MS Word uses multiple threads: one thread to format the text, another thread to process inputs, etc.

Multithreading is a technique used in OSs to improve the performance and responsiveness of computer systems. Multithreading allows multiple threads (i.e., lightweight processes) to share the same resources of a single process, such as the CPU, memory, and I/O devices.

---------------------------------------------

1 In the context of operating systems or computer systems, "process starvation" refers to a situation where a process is unable to execute or complete its tasks due to being deprived of necessary resources, such as CPU time, memory, or input/output (I/O) operations. This deprivation may occur due to resource contention, scheduling algorithms, or other factors that prevent the process from making progress.

2 Dispatches process = selects the process to be executed